Here at Perforce we’ve recently started using container technology, and we love the efficiency and scalability it helps us achieve: it’s like being given a particularly sophisticated and beautiful version of Lego. However, when containers are used to support DevOps and software production in particular, issues such as capacity planning, network configuration and security all need careful consideration. For instance, containers can place heavy demands on the network. New skills and tools are often needed, too.

This is particularly true of large-scale DevOps projects, which are increasingly found not just in big enterprises but wherever large volumes of digital assets are involved, such as game development or IoT. Delivering high productivity in what are fast becoming ‘software factories’ brings challenges on a whole new level compared with the smaller DevOps projects that early adopters pioneered.

Understanding containers

Before we dig deeper into the detail, here is an explanation of containers for the uninitiated. Containers can lower costs by optimizing resources, standardizing environments and reducing time to market. They do this by enclosing software and its dependencies in neat little packages that can be deployed anywhere, whether in the cloud, in an enterprise’s data centre or on an individual’s workstation. Just as virtual machines (VMs) made hardware and operating systems easier to manage and scale, containers largely do the same for the software they package.

The result is that organisations can achieve a better return on investment in hardware, operating systems or cloud services. For instance, there is no need to create application-specific VM configurations, or to scale up or add VMs, all of which takes time. Instead, more containers can be deployed in seconds, which is particularly useful during traffic spikes.
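One way to picture ‘more containers in seconds’ is a proportional scaling rule. The minimal sketch below follows the shape of the formula documented for the Kubernetes Horizontal Pod Autoscaler, desiredReplicas = ceil(currentReplicas × currentMetric / targetMetric). The function name, the default bounds and the example numbers are our own illustrative assumptions, and the sketch deliberately omits the tolerance band and stabilisation window a real autoscaler applies.

```python
import math


def desired_replicas(current_replicas: int,
                     current_metric: float,
                     target_metric: float,
                     min_replicas: int = 1,
                     max_replicas: int = 100) -> int:
    """Proportional scaling rule with the same shape as the Kubernetes HPA formula.

    current_metric / target_metric could be, for example, the average
    requests per second each container sees versus the rate one container
    is sized for. The bounds are hypothetical defaults.
    """
    if current_replicas < 1 or target_metric <= 0:
        raise ValueError("need at least one replica and a positive target")
    wanted = math.ceil(current_replicas * (current_metric / target_metric))
    # Clamp to the configured bounds so a spike cannot scale without limit.
    return max(min_replicas, min(max_replicas, wanted))


# A traffic spike: 4 containers each seeing 250 req/s, but sized for 100 req/s.
print(desired_replicas(4, 250.0, 100.0))  # 10
```

When the spike subsides, the same rule scales back down (4 containers at 50 req/s against a 100 req/s target gives 2), which is where the cost savings come from.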

Containers also bring security benefits thanks to their short-lived, on-demand nature: a container is often started in response to a request, or to serve a peak in activity or web traffic, and destroyed afterwards. So if an attack does happen, the window of opportunity to do damage is minimized. It is also far more difficult for an attacker to install malware that survives after the container is destroyed.

In relation to DevOps

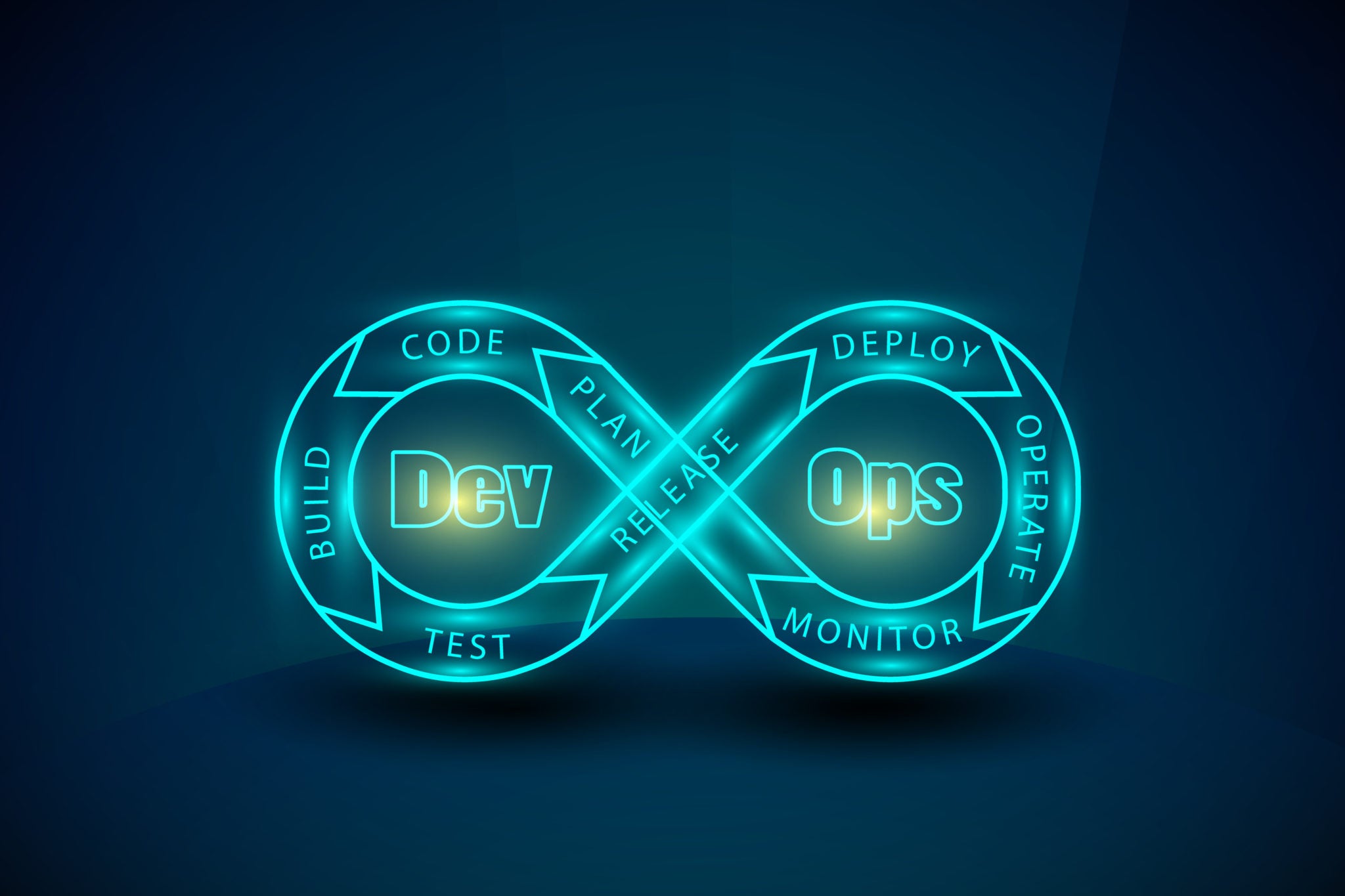

DevOps is all about automation. To fulfil the Agile goal of speeding up delivery of value to the customer, many organisations have already embraced container workflows to support Continuous Integration (CI). Containers are also “all about automation”, so using them to implement Continuous Delivery (CD) into production is the next logical step.

Containers deliver automation through an increasingly popular paradigm called Infrastructure as Code (IaC), which has become a fundamental DevOps practice for managing cloud-age infrastructure. The infrastructure in question can include virtual machines, cloud resources, server automation and software-defined networking, and, most importantly for this article, containers. Alongside the application code, users can now check into the source control system a file that specifies the application’s environment, configuration and access. With the popular Docker container system, this file is called a Dockerfile, and it contains all the information needed to build a container image: a lightweight, self-contained package, loosely comparable to a tiny virtual machine, that can be built automatically and run as a container.
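To illustrate the Infrastructure as Code idea, here is a minimal sketch that renders a Dockerfile from a small declarative description of an environment, so the resulting text file can be checked into version control next to the application. The `ImageSpec` class and `render_dockerfile` function are hypothetical helpers, not part of Docker; only the Dockerfile instructions they emit (FROM, WORKDIR, COPY, RUN, USER, EXPOSE, CMD) are standard. The base image, package and UID in the example are assumptions chosen for illustration.

```python
import json
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class ImageSpec:
    """Hypothetical declarative description of an application's environment."""
    base_image: str
    workdir: str
    packages: List[str] = field(default_factory=list)
    port: Optional[int] = None
    command: List[str] = field(default_factory=list)
    user: Optional[str] = None  # e.g. a numeric UID, so the app does not run as root


def render_dockerfile(spec: ImageSpec) -> str:
    """Render the spec as Dockerfile text, ready to commit to version control."""
    lines = [f"FROM {spec.base_image}", f"WORKDIR {spec.workdir}", "COPY . ."]
    if spec.packages:
        # Assumes a Python base image, where pip is available.
        lines.append("RUN pip install --no-cache-dir " + " ".join(spec.packages))
    if spec.user:
        # Switch user only after the installation steps that need root.
        lines.append(f"USER {spec.user}")
    if spec.port is not None:
        lines.append(f"EXPOSE {spec.port}")
    if spec.command:
        # JSON array ("exec") form, so the command is not wrapped in a shell.
        lines.append("CMD " + json.dumps(spec.command))
    return "\n".join(lines) + "\n"


# Hypothetical example values.
spec = ImageSpec(base_image="python:3.12-slim", workdir="/app",
                 packages=["flask"], port=8080,
                 command=["python", "app.py"], user="10001")
print(render_dockerfile(spec))
```

Building from the generated file is then a single command such as `docker build -t web:1.0 .` (the tag is hypothetical). Because the Dockerfile is plain text, every change to the environment is reviewed and versioned like any other code change.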

Without containers, an organisation’s DevOps automation might stop at the gate of its production infrastructure, requiring engineers to move new applications onto hosts (whether by copying them manually or by using tools) and, of course, to make sure that all of the new application’s dependencies are present on the VMs. With containers, a relatively small number of simple commands in a file generate everything needed to create the “self-contained” production environment and run the new code.

Challenges of containers in DevOps

With all the new tools available to support container deployments, the biggest challenge may be transforming the architecture to allow legacy applications to work in containers, while at the same time building new applications based on microservices.

When DevOps teams start adopting containers, an obvious interim strategy is to use existing VM images and automation tools to create workflows that copy the VMs and containers across VM hosts. The challenge is to make sure the VMs are optimised for container execution. Performance benchmarking of the VMs at scale should be part of this work, so that capacity is properly understood.
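Benchmarking produces per-container resource figures; turning them into a capacity estimate is simple arithmetic. The sketch below assumes identical containers, a fixed reservation for the OS and container runtime, and a percentage of headroom left free for bursts. All names and numbers are hypothetical, and real figures should come from your own benchmarks.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Resources:
    cpu_millicores: int   # 1000 millicores = one CPU core
    memory_mib: int


def containers_per_vm(vm: Resources, per_container: Resources,
                      reserved: Resources, headroom_pct: int = 20) -> int:
    """How many identical containers fit on one VM host.

    reserved: capacity kept back for the OS and container runtime.
    headroom_pct: share of the remaining capacity deliberately left free.
    """
    if not 0 <= headroom_pct < 100:
        raise ValueError("headroom_pct must be in [0, 100)")
    # Integer arithmetic keeps the result exact.
    usable_cpu = (vm.cpu_millicores - reserved.cpu_millicores) * (100 - headroom_pct) // 100
    usable_mem = (vm.memory_mib - reserved.memory_mib) * (100 - headroom_pct) // 100
    if usable_cpu <= 0 or usable_mem <= 0:
        return 0
    # Whichever resource runs out first sets the limit.
    return min(usable_cpu // per_container.cpu_millicores,
               usable_mem // per_container.memory_mib)


def vms_needed(total_containers: int, per_vm: int) -> int:
    """VM hosts needed for a target container count (integer ceiling division)."""
    if per_vm <= 0:
        raise ValueError("each VM must fit at least one container")
    return -(-total_containers // per_vm)


# Hypothetical figures: 8-core, 32 GiB VMs; each container needs 0.25 core and 512 MiB.
vm = Resources(cpu_millicores=8000, memory_mib=32768)
app = Resources(cpu_millicores=250, memory_mib=512)
overhead = Resources(cpu_millicores=500, memory_mib=2048)
per_vm = containers_per_vm(vm, app, overhead)
print(per_vm, vms_needed(200, per_vm))  # 24 9
```

With these numbers CPU, not memory, is the binding constraint (24 containers by CPU versus 48 by memory), which is exactly the kind of finding benchmarking is meant to surface.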

Next, think about how network resources might come under stress when running containers in production, because large container images, or many container files, are moving across the network. Network configurations, monitoring processes and tools must therefore be able to support a dynamic environment in which containers are constantly being spun up and down, particularly in large-scale DevOps projects.
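Docker images are built from layers, and a host only downloads the layers it does not already hold, so the network cost of a rollout depends heavily on what each host has cached. The rough estimate below assumes every host pulls through one shared link at its full nominal bandwidth, with no compression or peer-to-peer distribution; the layer names and sizes are made up for illustration.

```python
from typing import Dict, List, Set


def pull_bytes(image_layers: Dict[str, int], cached: Set[str]) -> int:
    """Bytes one host must download: only the layers it has not cached."""
    return sum(size for layer, size in image_layers.items() if layer not in cached)


def rollout_seconds(image_layers: Dict[str, int],
                    host_caches: List[Set[str]],
                    link_mbps: float) -> float:
    """Rough time to deliver an image to every host over one shared link.

    Assumes the link (in megabits per second) is the only bottleneck.
    """
    total_bytes = sum(pull_bytes(image_layers, cache) for cache in host_caches)
    return total_bytes * 8 / (link_mbps * 1_000_000)


# Hypothetical image: layer name -> size in bytes.
layers = {"base-os": 80_000_000, "runtime-deps": 150_000_000, "app": 20_000_000}
cold = [set()] * 10                          # 10 new hosts with nothing cached
warm = [{"base-os", "runtime-deps"}] * 40    # 40 hosts that only need the app layer
print(rollout_seconds(layers, cold + warm, 1000))   # 26.4
print(rollout_seconds(layers, [set()] * 50, 1000))  # 100.0
```

Even this crude model shows why monitoring matters: the same release costs roughly four times as much network time when the 50 hosts are all freshly provisioned.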

Earlier we talked about the short-lived nature of containers inherently making them more secure. Beyond that “free” benefit, there are some container security ‘best practices’ to consider. To start, containers running application instances should be provisioned with no privileges and then made useful by carefully adding only the bare minimum of functionality and access rights. This avoids granting unnecessary levels of access, which can create vulnerabilities.
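As a sketch of ‘start with nothing, then add the minimum’, the helper below builds a `docker run` command line that drops every Linux capability, forbids privilege escalation, runs as a non-root user and mounts the container’s root filesystem read-only, then adds back only the capabilities the caller lists. The flags are standard `docker run` options; the helper itself, the default UID and the image name are hypothetical. How added capabilities interact with a non-root user depends on the runtime and the binary, so check your runtime’s documentation before relying on that combination.

```python
from typing import Iterable, List


def least_privilege_run_args(image: str,
                             needed_caps: Iterable[str] = (),
                             user: str = "10001",
                             read_only_rootfs: bool = True) -> List[str]:
    """Build a `docker run` argument list that starts with no Linux
    capabilities and adds back only those explicitly requested."""
    args = [
        "docker", "run", "--rm",
        "--cap-drop", "ALL",                    # start from zero capabilities
        "--security-opt", "no-new-privileges",  # block setuid-style escalation
        "--user", user,                         # hypothetical non-root UID
    ]
    for cap in sorted(set(needed_caps)):        # add back the bare minimum
        args += ["--cap-add", cap]
    if read_only_rootfs:
        args.append("--read-only")              # root filesystem mounted read-only
    args.append(image)
    return args


# Hypothetical image; a web service on an unprivileged port needs no extra capabilities.
args = least_privilege_run_args("registry.example.com/web:1.4.2")
print(" ".join(args))
# subprocess.run(args, check=True) would start the container; it is not run here.
```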

Apply whitelist rules to container network interfaces, and restrict container-to-container communication. Monitor each container’s disk and memory usage against its quotas, and use automation to destroy any container that breaks the rules. Container images should be deployed to production from a trusted, read-only repository and further secured by encryption. Enterprises may well be able to implement these strategies with existing tools.
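The decision logic behind these rules can be sketched in a few lines. In practice, enforcement belongs to the platform (for example, Kubernetes NetworkPolicy objects for traffic and runtime memory limits for quotas); the data structures, the container IDs and the deny-by-default treatment of containers with no recorded quota are our own illustrative assumptions.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet, List, Tuple


@dataclass(frozen=True)
class Usage:
    memory_mib: int
    disk_mib: int


@dataclass(frozen=True)
class Quota:
    memory_mib: int
    disk_mib: int


# Whitelist of permitted (source service, destination service, port) flows.
# Anything not listed is denied, which restricts container-to-container traffic.
Allowlist = FrozenSet[Tuple[str, str, int]]


def connection_allowed(allowlist: Allowlist, src: str, dst: str, port: int) -> bool:
    return (src, dst, port) in allowlist


def containers_to_destroy(usage: Dict[str, Usage],
                          quotas: Dict[str, Quota]) -> List[str]:
    """IDs of containers over their memory or disk quota, in sorted order.

    Containers with no recorded quota are flagged too (deny by default),
    an illustrative policy choice rather than a requirement.
    """
    flagged = []
    for cid, used in sorted(usage.items()):
        quota = quotas.get(cid)
        if (quota is None or used.memory_mib > quota.memory_mib
                or used.disk_mib > quota.disk_mib):
            flagged.append(cid)
    return flagged


# Hypothetical snapshot from a monitoring agent.
usage = {"web-1": Usage(300, 100), "web-2": Usage(900, 100), "job-7": Usage(50, 50)}
quotas = {"web-1": Quota(512, 1024), "web-2": Quota(512, 1024)}
print(containers_to_destroy(usage, quotas))  # ['job-7', 'web-2']
allow = frozenset({("web", "db", 5432)})
print(connection_allowed(allow, "web", "db", 5432),
      connection_allowed(allow, "db", "web", 5432))  # True False
```

The IDs returned by `containers_to_destroy` would then be handed to whatever automation actually removes containers; keeping the decision separate from the action makes the policy easy to test.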

Right skills, right tools

Managing containers demands a cross-functional team equipped with modern skills and tools. There is no ‘one size fits all’ recipe. Fortunately, there is a vibrant community around containers, with plenty of individuals willing to share their resources and knowledge, particularly in the Docker ecosystem.

Plus, many of the tools used for years in traditional software environments can also support containers along the DevOps pipeline. For example, version control systems (VCSs) can support demanding production environments by versioning the Dockerfiles that describe container images, or the images themselves. Application lifecycle management and Agile planning tools can help plan, manage and test all the DevOps activities involved in building, deploying, monitoring and maintaining the entire infrastructure. Newer tools such as Kubernetes, the open-source system for automating deployment, scaling and management of containerized applications that was originally designed at Google, are making things both more robust and simpler, especially for projects at scale.
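To give a flavour of how Kubernetes and version control fit together, here is a minimal sketch that builds a Kubernetes `apps/v1` Deployment as a Python dictionary and prints it as JSON, producing a file that can be versioned alongside the Dockerfile. The field names follow the Deployment API; the service name, registry and image tag are hypothetical.

```python
import json
from typing import Any, Dict


def deployment_manifest(name: str, image: str, replicas: int,
                        container_port: int) -> Dict[str, Any]:
    """Minimal Kubernetes apps/v1 Deployment, expressed as a Python dict."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            # The selector must match the pod template's labels.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{
                        "name": name,
                        # Pin an exact image version rather than a floating tag like "latest".
                        "image": image,
                        "ports": [{"containerPort": container_port}],
                    }]
                },
            },
        },
    }


if __name__ == "__main__":
    # Hypothetical service name, registry and tag.
    manifest = deployment_manifest("web", "registry.example.com/web:1.4.2", 3, 8080)
    print(json.dumps(manifest, indent=2))
```

Applying it would be a matter of saving the output to a file and running `kubectl apply -f` on it, since kubectl accepts JSON as well as YAML. Pinning an exact image version in a versioned manifest means every deployment can be traced back to a specific commit.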

Containers have a huge amount of potential to transform software development, test and production environments, particularly where DevOps is involved. What’s important to keep in mind is the need for rigorous capacity planning, managing network resources and robust security, particularly ‘at scale’. The good news is that while there are multiple challenges in this still-evolving technology area, there is already a wealth of experience, expertise and tools that will make successful container adoption much easier.