Nvidia and AMD have both announced new AI chips for desktop computers. Nvidia is releasing three new graphics cards with tensor cores capable of running generative AI applications. AMD's new processors, meanwhile, integrate a neural processing unit (NPU) designed to handle AI tasks on desktop computers, which the company says is a world-first.

Rising demand for AI chips

The announcements come amid growing demand for AI chips across the PC market, as interest in running generative AI applications locally, rather than in the cloud, continues to rise. Nvidia claims that its new graphics cards, the RTX 4070 Super, RTX 4070 Ti Super and RTX 4080 Super, speed up large language model (LLM) processing by up to five times and AI video generation by up to 150%. "With 100 million RTX GPUs shipped, they provide a massive installed base for powerful PCs for AI applications," said Justin Walker, Nvidia's senior director of product management, at a press conference.

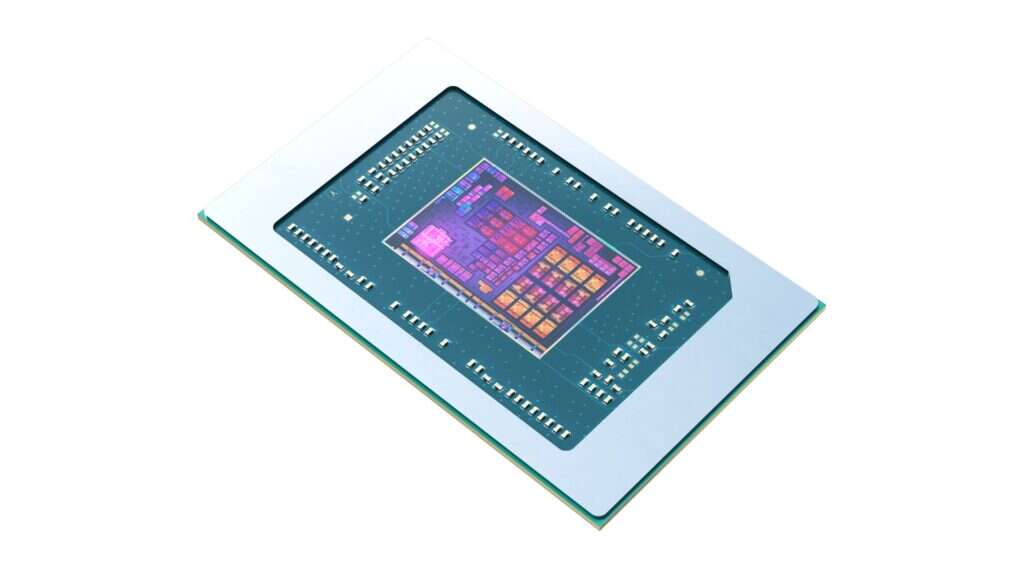

AMD's new Ryzen 8000G series offers up to eight cores and sixteen threads, alongside an NPU designed to accelerate and optimize AI software applications and workloads. "AMD continues to lead the AI hardware revolution by offering the broadest portfolio of processors with dedicated AI engines in the x86 market," said Jack Huynh, general manager of AMD's computing and graphics group. Although NPU-like components have previously appeared in laptops, AMD claims to be the first company to include them in desktop PC processors.

AI PC era in the offing?

AMD and Nvidia both said their product releases would help usher in the era of the "AI PC," in which advanced AI models run on ordinary consumer and office computers. Most generative AI applications currently run in the cloud, though exceptions have emerged in recent months. Apple, for example, runs foundation models for voice modelling and transcription on the iPhone. Qualcomm, meanwhile, announced in May 2023 that it was developing processors capable of running AI applications on Windows laptops.

Even so, no PC on the market can yet run every type of AI application locally. Nvidia's new GPUs, for instance, will still rely on cloud computing for heavy-duty processing. "Nvidia GPUs in the cloud can be running really big, large language models and using all that processing power to power very large AI models, while at the same time, RTX tensor cores in your PC are going to be running more latency-sensitive AI applications," Walker told journalists yesterday.