AMD has announced the release of a new AI chip. Named the Instinct MI300X, the semiconductor combines three distinct layers of silicon to deliver what its manufacturer claims is a 3.4-fold increase in speed for machine-learning calculations. Meta, OpenAI and Microsoft confirmed that they would all utilise the new chip as they seek alternatives to Nvidia’s range of graphics processing units (GPUs), which currently dominates the AI chip market.

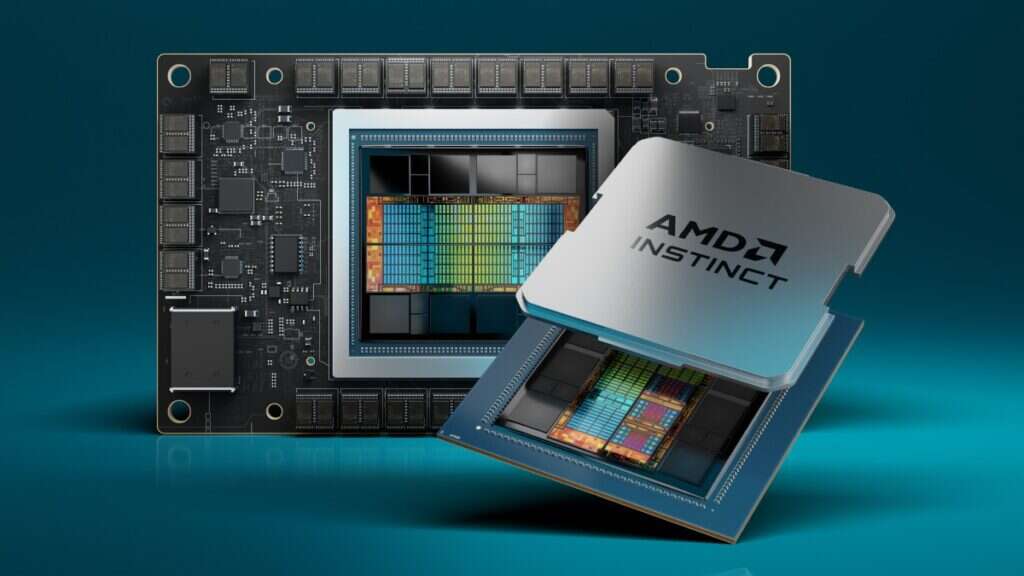

The Instinct MI300X incorporates several types of smaller chips to deliver performance improvements on its predecessors, AMD claims. The new semiconductor layers three CPU chips and six accelerator chips on top of four input-output dies, which in turn are connected via another piece of silicon to eight Dynamic Random Access Memory (DRAM) stacks. The Instinct MI300X also comes with 192GB of high-performance “HBM3” memory, allowing it to support larger AI models than its predecessors. As such, claimed AMD vice-president Sam Naffziger, the Instinct MI300 delivers “the highest density performance that industry knows how to produce at this time”.

Machine learning pioneers including OpenAI and Meta immediately expressed an interest in using the chip, with analysts speculating that both companies were keen to diversify their chip supply away from Nvidia. Nvidia, which is based in Santa Clara, currently portrays itself as the “World Leader in Artificial Intelligence Computing” and boasts an estimated 70% market share in AI chips – though it will doubtless be troubled that Microsoft and Meta, two of its most important customers, have said that they will make ample use of AMD’s MI300X chip.

AMD’s MI300X versus in-house chips

AMD’s own chief executive, Lisa Su, however, injected some doubt as to whether the company is capable of breaking its rival’s stranglehold on the sector, conceding that it “takes work to adopt AMD”. Even so, she added, ending Nvidia’s dominance of the AI chip market isn’t AMD’s goal. As far as that overall market is concerned, Su told reporters, “we believe it could be $400bn-plus in 2027, and we could get a nice piece of that.”

How large a slice will depend on how enthusiastic traditionally large buyers of AI chips remain about striking deals with external suppliers. Although they have expressed enthusiasm for AMD’s product portfolio, many of its best customers are increasingly keen on building their own AI chips. Last month, for example, Amazon Web Services (AWS) followed its competitors Microsoft Azure and Google Cloud in announcing the release of its Trainium2 chip for use in training new AI models.

The financial markets do not seem to share in this scepticism. Shares in AMD spiked by almost 10% after its announcement of the Instinct MI300, contributing to a good day of trading on the Nasdaq, already buoyed by news of Google’s latest “Gemini” AI model. “Today it’s an AMD-Google rally,” Infrastructure Capital Management’s Jay Hatfield told Reuters. “We’re kind of in this weird market, a tag-team market, where one day tech leads, and then the next day value and the broad market lead.”