Intel has released its third-generation “Cooper Lake” family of Xeon processors — which the chip heavyweight claims will make AI inference and training “more widely deployable on general-purpose CPUs”.

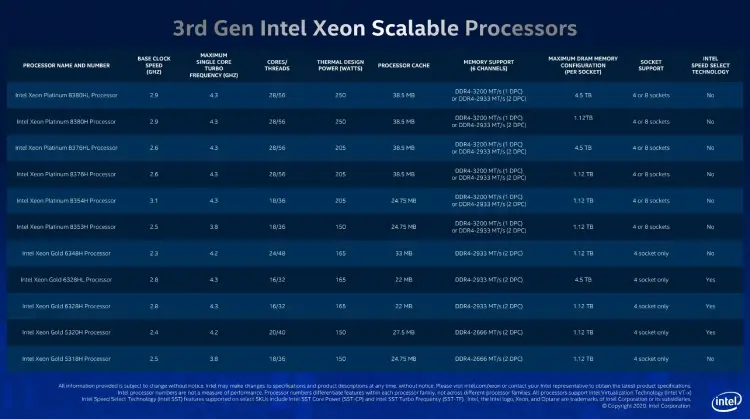

While the new CPUs may not break records (the top-of-the-range Platinum 8380H has 28 cores, for a total of 224 cores in an 8-socket system), they bring some welcome new capabilities for users and are being embraced by OEMs keen to refresh their hardware offerings this year.

The company claims the chips will be able to underpin more powerful deep learning, higher virtual machine (VM) density, and in-memory database, mission-critical and analytics-intensive workloads.

Intel says the 8380H will offer 1.9x better performance on “popular” workloads compared with five-year-old systems (benchmarks here, #11).

The 8380H has a maximum memory speed of 3200 MHz, a base frequency of 2.90 GHz and support for up to 48 PCI Express lanes.

The Cooper Lake chips also support bfloat16: a numeric format that uses half the bits of FP32 (16 rather than 32) but, Intel says, “achieves comparable model accuracy with minimal software changes required.”

Bfloat16 was born at Google and is handy for AI, but hardware support for it has not been the norm to date. (AI workloads require a heap of floating-point arithmetic, roughly the equivalent of your machine doing a lot of fractions, which is computationally intensive for binary hardware.)

(For readers wanting to get into the weeds on exponent and mantissa bit differences and the like, EE Journal’s Jim Turley has a nice write-up here; Google Cloud’s Shibo Wang talks through how it’s used in Cloud TPUs here.)
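To make the “half the bits” point concrete, here is a minimal Python sketch of how an FP32 value can be squeezed into bfloat16. It assumes the common approach of keeping the top 16 bits of the FP32 pattern (1 sign bit, the same 8 exponent bits, and 7 of the 23 mantissa bits) with round-to-nearest-even. The helper names are hypothetical and purely illustrative; this says nothing about how Intel’s hardware performs the conversion.

```python
import struct


def fp32_bits(x: float) -> int:
    """Return the 32-bit IEEE 754 single-precision bit pattern of x."""
    return struct.unpack("<I", struct.pack("<f", x))[0]


def to_bfloat16_bits(x: float) -> int:
    """Hypothetical helper: round an FP32-representable value to bfloat16.

    bfloat16 keeps FP32's sign bit and full 8-bit exponent (so the same
    numeric range) but only the top 7 of FP32's 23 mantissa bits.
    Assumes round-to-nearest-even on the 16 discarded bits.
    """
    if x != x:  # NaN: return a canonical quiet NaN rather than rounding
        return 0x7FC0
    bits = fp32_bits(x)
    # Add just under half an ulp, plus 1 if the kept part is odd (ties to even)
    rounding_bias = 0x7FFF + ((bits >> 16) & 1)
    return ((bits + rounding_bias) >> 16) & 0xFFFF


def bfloat16_to_float(b: int) -> float:
    """Widen bfloat16 back to FP32 by zero-filling the low 16 bits."""
    return struct.unpack("<f", struct.pack("<I", b << 16))[0]


if __name__ == "__main__":
    b = to_bfloat16_bits(3.14159265)
    print(hex(b), bfloat16_to_float(b))  # prints "0x4049 3.140625"
```

The example shows the trade-off the format makes: pi survives only to about two or three significant digits, but because the 8-bit exponent is untouched, very large and very small values (up to roughly 3.4e38) stay representable, which is the property that tends to matter most for training.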

Intel claims the chips have been adopted as the foundation for Facebook’s newest Open Compute Platform (OCP) servers, and that Alibaba, Baidu and Tencent are also adopting them. The chips are shipping now, with general OEM system availability expected in the second half of 2020.

Also new: the Optane Persistent Memory 200 series, offering up to 4.5TB of memory per socket for data-intensive workloads; two new NAND SSDs (the D7-P5500 and D7-P5600) featuring a new low-latency PCIe controller; and, teased rather than launched, the forthcoming AI-optimised Stratix 10 NX FPGA.