Google claimed a breakthrough in processor speed this week when it released research showing that an AI supercomputer powered by its in-house tensor processing units (TPUs) is faster and more energy efficient than an equivalent machine running on Nvidia A100 GPUs. Nvidia has cashed in on the generative AI boom, with demand for the A100, the chip used to train large language models such as OpenAI's GPT-4, going through the roof. But with a new GPU, the H100, ready to hit the market, Nvidia is unlikely to be worried by Google's achievement.

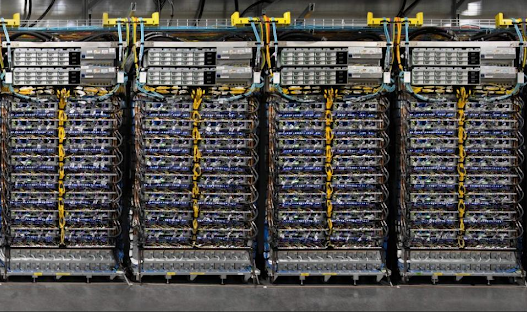

The research paper, published on Tuesday, shows that Google has strung together 4,000 of its fourth-generation TPUs to build a supercomputer. It says the machine is up to 1.7 times faster than a comparably sized system running on Nvidia A100 GPUs, and up to 1.9 times more power efficient.

Why Google's TPUs are more efficient than Nvidia's A100

In the paper, Google's researchers explain how they connected the 4,000 TPUs using optical circuit switches developed in-house. Google has been using TPU v4 in its own systems since 2020, and made the chips available to customers of its Google Cloud platform last year. The company's biggest LLM, PaLM, was trained using two 4,000-TPU supercomputers.

"Circuit switching makes it easy to route around failed components," Google Fellow Norm Jouppi and Google distinguished engineer David Patterson explained in a blog post about the system. "This flexibility even allows us to change the topology of the supercomputer interconnect to accelerate the performance of a machine learning model."

This switching system was key to helping Google achieve a performance bump, says Mike Orme, who covers the semiconductor market for GlobalData. “Although each TPU didn’t match the processing speed of the best Nvidia AI chips, Google’s optical switching technology for connecting the chips and passing the data between them made up the performance difference and more,” he explains.

Nvidia’s technology has become the gold standard for training AI models, with Big Tech companies buying thousands of A100s as they attempt to outdo each other in the AI arms race. The OpenAI supercomputer used to train GPT-4 features 10,000 of the Nvidia GPUs, which retail at $10,000 each.

But the A100 is about to be usurped by Nvidia's latest model, the H100. The recently launched chip topped the rankings for performance and efficiency in the MLPerf inference benchmark results released today by MLCommons, the open AI engineering consortium that tracks processor performance. Inference is the stage at which a trained AI model is put to work carrying out tasks, and the benchmarks measure how quickly and efficiently hardware can do this.

“Nvidia claims [the H100] is nine times faster than the A100s involved in the Google comparison,” Orme says. “That speed premium would eliminate the edge Google’s optical interconnect technology provides. The battle of odious comparisons intensifies.”

What are Google’s ambitions in AI chips?

Google says it uses TPUs for 90% of its AI work, but despite the chips' capabilities, Orme does not expect the tech giant to sell them to third parties.

“There is no ambition on Google’s part to compete with Nvidia chips in the merchant market for AI chips,” he says. “The proprietary TPUs will not make it out of the Google data centre or its AI supercomputers if they were ever intended to do so.”

He adds that very few people outside the company will get to use the technology, as Google Cloud is a relatively minor player in the public cloud market. It holds 11% of the market, according to figures from Synergy Research Group, trailing well behind its hyperscaler rivals Amazon's AWS and Microsoft Azure, which hold 34% and 21% respectively.

Google has also struck a deal with Nvidia that will make the H100 available to Google Cloud customers, and Orme says this shows that Nvidia's position as market leader will remain secure for some time to come.

“Nvidia is likely to remain the AI chip kingpin in a market that will reflect the feverish excitement surrounding generative AI as spending soars on training and inference capacity,” he adds.