Cerebras Systems has announced the creation of the world’s “fastest” AI chip.

The Wafer Scale Engine 3 (WSE-3) is purpose-built to train AI models up to ten times larger than GPT-4 and Gemini. The chip contains 4 trillion transistors and is manufactured on a 5nm process.

“When we started on this journey eight years ago, everyone said wafer-scale processors were a pipe dream,” said Andrew Feldman, CEO and co-founder of Cerebras Systems, upon WSE-3’s unveiling. “WSE-3 is the fastest AI chip in the world, purpose-built for the latest cutting-edge AI work, from mixture of experts to 24 trillion parameter models. We are thrilled to bring WSE-3 to market to help solve today’s biggest AI challenges.”

A chip to power scalable computers

Cerebras builds computer systems for AI deep learning applications. Its new CS-3 supercomputer is powered by the WSE-3, the third generation of the only commercial wafer-scale AI processor.

Feldman said in a press briefing that the new chip, compared with the WSE-2, promises “twice the performance, same power draw, same price, so this would be a true Moore’s Law step”, referring to Gordon Moore’s observation that the number of transistors on a chip doubles roughly every two years.

WSE-3 was built on Taiwan Semiconductor Manufacturing Co’s 5nm manufacturing process and powers the Cerebras CS-3 AI supercomputer, delivering 125 petaflops from 900,000 AI-optimised compute cores on a single processor. Each core is independently programmable for the operations that underpin neural network training and inference in deep learning.
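As a rough sanity check on those figures, the quoted throughput can be divided across the quoted core count. The sketch below is illustrative arithmetic only: it assumes the 125 petaflops figure is an aggregate spread evenly across all 900,000 cores, which Cerebras has not stated here, and the function name is hypothetical.

```python
# Back-of-envelope arithmetic using only the figures quoted in the article.
# Assumption (not from Cerebras): throughput is spread evenly across all cores.

PEAK_FLOPS = 125e15      # 125 petaflops, as quoted for the CS-3
CORE_COUNT = 900_000     # AI-optimised compute cores on the WSE-3


def per_core_gigaflops(peak_flops: float, cores: int) -> float:
    """Return the average throughput per core, in gigaflops."""
    return peak_flops / cores / 1e9


if __name__ == "__main__":
    print(f"{per_core_gigaflops(PEAK_FLOPS, CORE_COUNT):.1f} GFLOPS per core")
```

Under that even-split assumption, each core would account for roughly 139 gigaflops of the quoted total.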

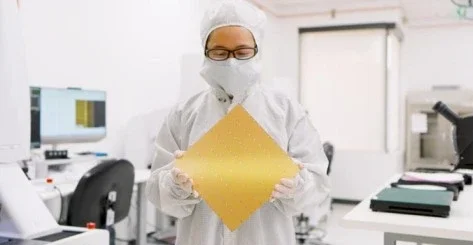

Cerebras’ AI chips compete with the advanced Nvidia hardware that OpenAI uses to power ChatGPT. Unlike Nvidia and other competitors, which slice a large TSMC wafer into smaller segments to make their chips, Cerebras keeps the wafer intact, meaning the final chip is about a foot wide. WSE-3 is 56 times larger in physical size than the Nvidia H100.

What can the fastest chip do?

The CS-3, the computer built to house the WSE-3, is designed to train the next generation of large language models (LLMs), delivering more compute performance while using less power and space. Cerebras says a one-trillion-parameter model can be trained on the CS-3 in the same way that a one-billion-parameter model can be trained on GPUs.

These properties should speed up AI model training for enterprises and hyperscalers. Larger models, in turn, could extend what AI can do in complex fields such as healthcare and science.

Better performance without more power consumption

As the world undergoes rapid digital transformation, power consumption has become a challenge for businesses, which face rising costs to build and run AI applications as well as pressure from sustainability regulations that demand better energy management. Cerebras’ chip promises increased performance without increased power draw.

The company is also partnering with Qualcomm to cut inference costs using co-developed technology. The two companies have applied sparsity, speculative decoding, and MX6 compression to the training stack on the CS-3, so that the output of the training process is tailored to the inference target, lowering the cost of inference tenfold. This allows Cerebras to sell WSE-3 systems alongside Qualcomm AI 100 Ultra chips as a higher-performing, optimised solution for customers.

Feldman said the startup has a “sizeable backlog of orders for CS-3 across enterprise, government and international clouds”.