OpenAI has launched a new LLM named GPT-4o and a desktop version of ChatGPT for macOS. The new model, said its creators, is faster and more efficient at parsing text, audio and video than its predecessors. GPT-4o also allows ChatGPT to process up to 50 languages at greater speeds. The new model is accessible to developers through OpenAI’s API and to free and premium users of ChatGPT.

“GPT-4o reasons across voice, text and vision,” said OpenAI’s Mira Murati. “With [its] incredible efficiencies, it also allows us to bring GPT-4-class intelligence to our free users. This is something that we’ve been trying to do for many, many months, and we’re very, very excited to finally bring GPT-4o to all of our users.”

New emotion recognition capabilities for GPT-4o version of ChatGPT

OpenAI also highlighted the new voice and vision perception capabilities of ChatGPT powered by GPT-4o. In a demonstration by OpenAI’s head of post-training, Barret Zoph, the chatbot was shown to successfully parse a linear equation on a sheet of paper and correctly answer questions about how to solve it – a notable advance in its own right, given the historical difficulty LLMs have had in solving mathematical conundrums.

A large part of the presentation was also devoted to showing how these new capabilities might be used to enhance the ability of ChatGPT to detect emotion. In a slightly halting demonstration, the firm’s head of frontiers research Mark Chen showed how the model could be interrupted and could pick up on emotional cues from the user. “When I was breathing super-hard there, it could tell and it knew, ‘Hey, you might want to calm down a little bit,’” said Chen. “Not only that, though, the model is able to generate voice in a variety of different emotive styles [with] a wide dynamic range.”

ChatGPT’s visual perception has also been enhanced by GPT-4o, claims OpenAI. Another demonstration by Zoph highlighted how the model can be used to interpret facial expressions to divine insights about the user’s emotional state from photos or video footage.

GPT-4o’s capabilities in this area should be welcomed, said Sean Betts, Omnicom Media Group’s Chief Product & Technology Officer. “GPT-4o is the first technology humanity has ever developed that not only understands emotions but can also respond with emotion,” said Betts. “A lot of talk around generative AI chat models has centred around intelligence, but I think we now also need to start thinking about the emotional intelligence of generative AI. This is new territory for us, but one that I think will completely change our relationships with technology from now on.”

GPT Store now open to free users

Live emotion recognition remains a hugely controversial subject among AI researchers and academics, with many disputing the premise that it is possible to fully interpret how an individual is feeling from visual data alone. The implications of promoting GPT-4o’s supposed capabilities in this area were only partially addressed by OpenAI during the presentation, with Murati explaining that the firm was committed to continually working with red teamers and representatives of civil society to root out and resolve problems and ethical dilemmas.

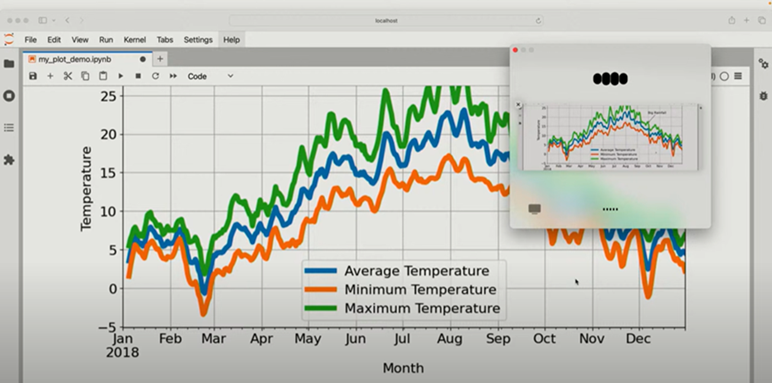

OpenAI also announced that it would be making many premium features of ChatGPT available to free users, including access to its GPT Store. The store allows users to download generative AI applications that are powered by OpenAI’s LLMs and tailored to specific use cases. A desktop version of ChatGPT was also announced, capable of live interpretation of data on the user’s screen. The app is currently available only to users of macOS, however, with a version for Windows to be released “later this year.”