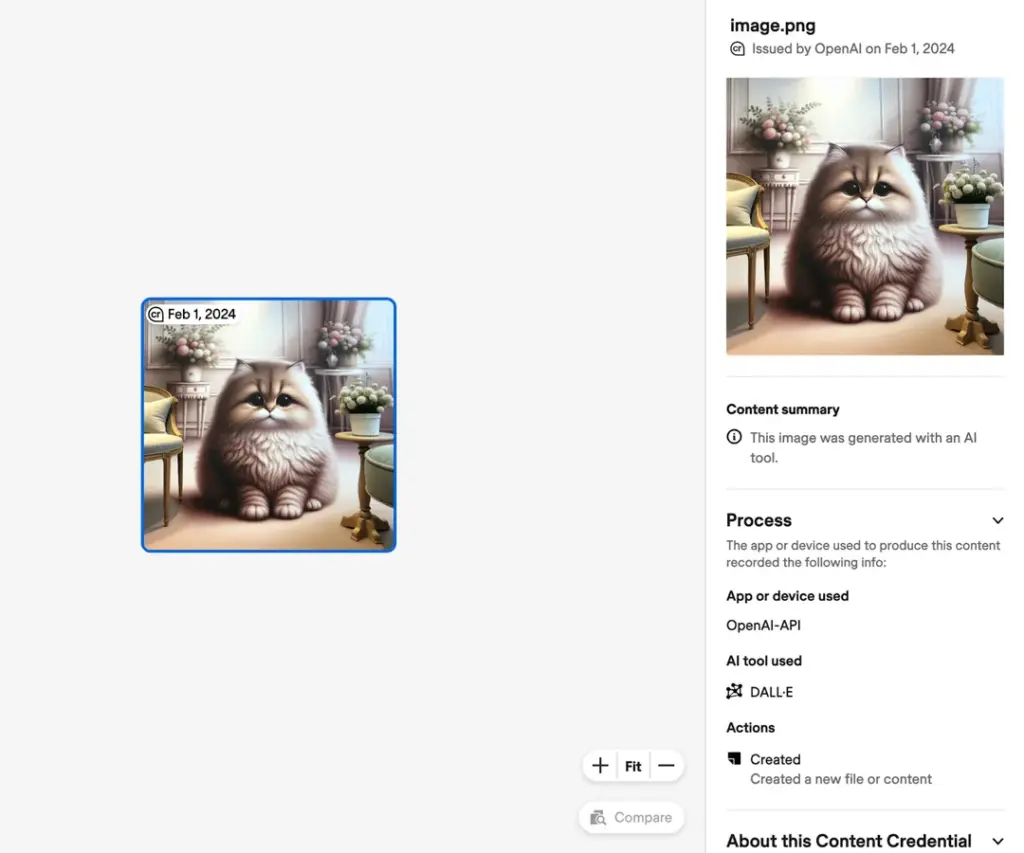

OpenAI has introduced a watermark system for DALL-E 3 as part of the company’s efforts to establish more firmly that images created on the platform are AI-generated. Every image generated with DALL-E 3 will carry a visible Content Credentials (CR) symbol in its top left corner, along with invisible metadata that lets users identify the source from which the image was generated.

OpenAI warned users, however, that watermarking was not a “silver bullet” for establishing whether or not imagery was AI-generated. “It can easily be removed either accidentally or intentionally,” the company said. “For example, most social media platforms today remove metadata from uploaded images, and actions like taking a screenshot can also remove it. Therefore, an image lacking this metadata may or may not have been generated with ChatGPT or our API.”

DALL-E 3 watermarks latest move to prevent AI image misuse

OpenAI’s announcement comes as more companies join the Coalition for Content Provenance and Authenticity (C2PA), a standards group whose members include tech giants Microsoft and Adobe. The CR watermarks, which were created by Adobe, will let users determine which AI platform generated an image and so help establish whether it was created by a human or by AI, making it harder to misuse images and easier to trust their authenticity. OpenAI claims that adding the watermark metadata to DALL-E 3 images “should have a negligible effect on latency and will not affect the quality of the image generation.” However, file size will inevitably grow because of the added data, by anywhere between 3 and 32% per image.
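To put the 3 to 32% range in concrete terms, the short Python sketch below estimates how large a file might become once the metadata is added. The 3 MB starting size and the function name `size_with_credentials` are hypothetical, chosen only for illustration; the two percentages are the bounds quoted above.

```python
def size_with_credentials(base_bytes: int, overhead_pct: float) -> int:
    """Estimate file size in bytes after a given percentage of metadata overhead."""
    return round(base_bytes * (1 + overhead_pct / 100))


# Hypothetical example: an image that is 3 MB before metadata is added.
base = 3_000_000

low = size_with_credentials(base, 3)    # lower bound quoted in the article
high = size_with_credentials(base, 32)  # upper bound quoted in the article

print(f"Without metadata: {base:,} bytes")
print(f"With metadata:    {low:,} to {high:,} bytes")
```

Under these assumptions, the same image would end up somewhere between roughly 3.09 MB and 3.96 MB, depending on where in the range it falls.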

White House champions AI watermarking in safety campaign

The Biden administration sees the deployment of watermarking as an essential step towards improving AI safety and regulation in the US, both to ensure the technology is used safely and to reduce the threat of misinformation. In 2023, President Biden held talks with major tech leaders, from which commitments to AI safety measures were mapped out. Meta has told the White House that it will tag AI-generated content across its social media platforms as part of its commitment under Biden’s voluntary AI scheme, which requires participating companies to prevent misinformation by watermarking AI-generated content. Adobe, Nvidia and IBM are just a few of the companies that have signed up to Biden’s scheme to help clamp down on AI misuse.

“We must be clear-eyed and vigilant about the threats from emerging technologies,” Biden said at a recent White House event. While watermarking may not be an airtight solution for blocking all misuse of inauthentic AI-generated content, the president nevertheless argued that it was a “promising step” in the right direction.